Hand-Tracking Developments

Article by: Jordan Murphy

Virtual Reality has pushed the boundaries of the immersive field since its inception, and one of its newest features is Hand-Tracking. Hand-Tracking for the Oculus Quest is still in beta at the time of writing; however, Facebook have revealed the next iteration that they are working on. Their high-fidelity hand-tracking research is available on Facebook’s research page, in a publication titled Constraining Dense Hand Surface Tracking with Elasticity.

Hand-Tracking works by using the inside-out cameras that are part of the Oculus Quest’s hardware. The headset detects the position and orientation of the user’s hands and the configuration of the user’s fingers. Once the hands are detected, computer vision algorithms track their movement and orientation.

Facebook Reality Labs

Facebook Reality Labs’ (FRL) journey to create more natural devices started with the Touch controllers. The Touch controllers for the Oculus Quest are comfortable to hold and contain sophisticated sensors. They deliver a lifelike hand presence and make even the most basic interactions in VR feel as though the user is really performing them. As close to the real thing as the Touch controllers get, they cannot replicate the expressiveness of hand signs or the act of typing.

On the 1st of December 2020, FRL published a paper with an accompanying video showing new research and technology that allows them to track the human hand in real time. With Facebook’s interest in VR through Oculus, it is not surprising to see them working towards a VR environment that is closer to natural human interaction. The existing hand-tracking system for the Oculus Quest has an issue with hand overlap, and addressing it is the core goal of FRL’s latest research. The research focuses on solving the major problem of losing tracking when hands overlap or touch, or when fingers are self-occluded (Hexus, 2020).

Self-contact and hand-tracking technology

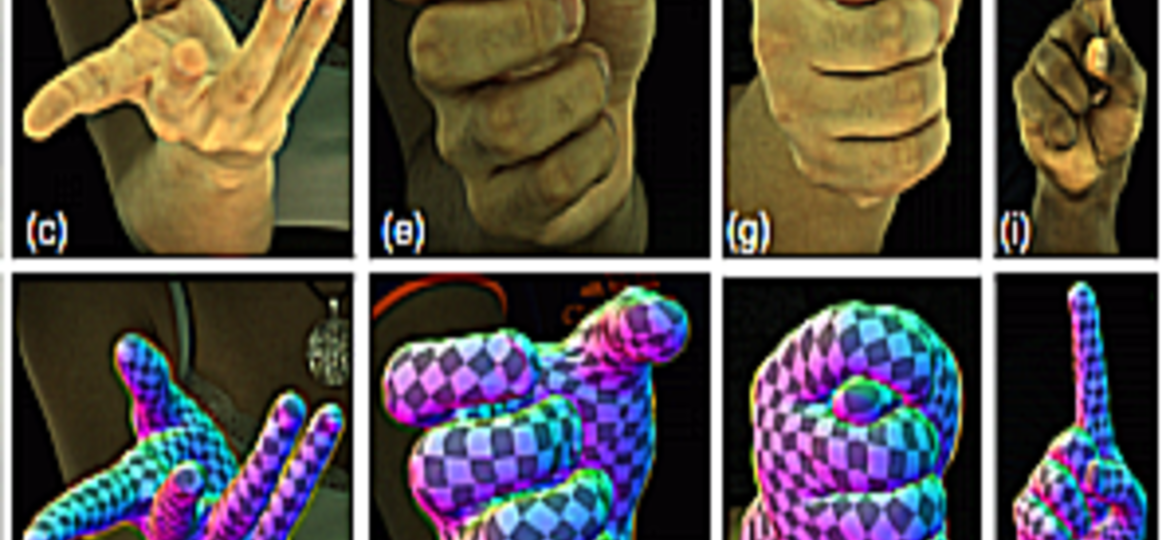

Many of the gestures and actions that people make with their hands involve self-contact and occlusion: shaking hands, making fists, or interlacing their fingers while thinking or idle. These everyday uses illustrate why tracking hands through self-contact and occlusion matters, yet today’s hand-tracking technology is not designed to handle the extreme amounts of self-contact and self-occlusion exhibited by common hand gestures.

FRL’s paper states that, by extending recent advances in vision-based tracking and physically based animation, the authors present the first algorithm capable of tracking high-fidelity hand deformations through highly self-contacting and self-occluding hand gestures. They describe constraining a vision-based tracking algorithm with a physically based deformable model, which yields an algorithm robust enough to handle the self-interactions and massive self-occlusion found in common hand gestures. This allows it to track two hands interacting with each other, as well as some of the most difficult gestures that human hands can make.
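
To make the idea of constraining a tracker with an elastic model more concrete, here is a toy sketch in Python. It is not FRL’s algorithm: it assumes a one-dimensional chain of points standing in for a hand surface, a simple squared-error term for the points the camera can see, and a spring-like term that keeps neighbouring points near a rest spacing. The names `fit_chain`, `rest_length`, `stiffness`, `steps`, and `lr` are hypothetical and chosen only for illustration.

```python
def fit_chain(observed, visible, rest_length, stiffness=1.0, steps=5000, lr=0.05):
    """Toy illustration: fit point positions to visible observations,
    with an elastic term filling in the occluded points.

    Minimises, by plain gradient descent:
      sum over visible i of (x[i] - observed[i])^2
      + stiffness * sum over i of (x[i+1] - x[i] - rest_length)^2
    """
    n = len(observed)
    # Start from the raw observations; occluded entries are only a first guess.
    x = [float(v) for v in observed]
    for _ in range(steps):
        grad = [0.0] * n
        # Data term: pull each visible point toward what the camera saw.
        for i in range(n):
            if visible[i]:
                grad[i] += 2.0 * (x[i] - observed[i])
        # Elastic term: penalise neighbours that stretch or compress
        # away from their rest spacing.
        for i in range(n - 1):
            stretch = x[i + 1] - x[i] - rest_length
            grad[i] -= 2.0 * stiffness * stretch
            grad[i + 1] += 2.0 * stiffness * stretch
        x = [xi - lr * gi for xi, gi in zip(x, grad)]
    return x


# Points 1 and 2 are occluded, so their "observed" values are placeholders.
positions = fit_chain(
    observed=[0.0, 0.0, 0.0, 3.0, 4.0],
    visible=[True, False, False, True, True],
    rest_length=1.0,
)
print([round(p, 3) for p in positions])
```

In this sketch the two occluded points receive no information from the camera, so the elastic term alone places them between their visible neighbours at roughly the rest spacing. The paper applies the same general principle to a far richer deformable model of the hand.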

Despite the improvements that this paper demonstrates over the hand-tracking technology already publicly available, the method that FRL has developed has its limitations. It is computationally expensive, and it is limited in its ability to capture high-frequency folds and wrinkles. In Figure 4 of the paper, the authors show that wrinkles visible in the captured images sometimes do not appear on the tracked mesh. These are issues that can be worked out in future iterations, and the research points to a promising future for hand-tracking in the immersive industry.

Conclusion

At Mersus Technologies, we use Hand-Tracking on our Avatar Academy Platform. This allows us to create experiences that are as close to the real world as possible, which helps learners transfer the knowledge and skills they gain from Avatar Academy to real-world procedures.